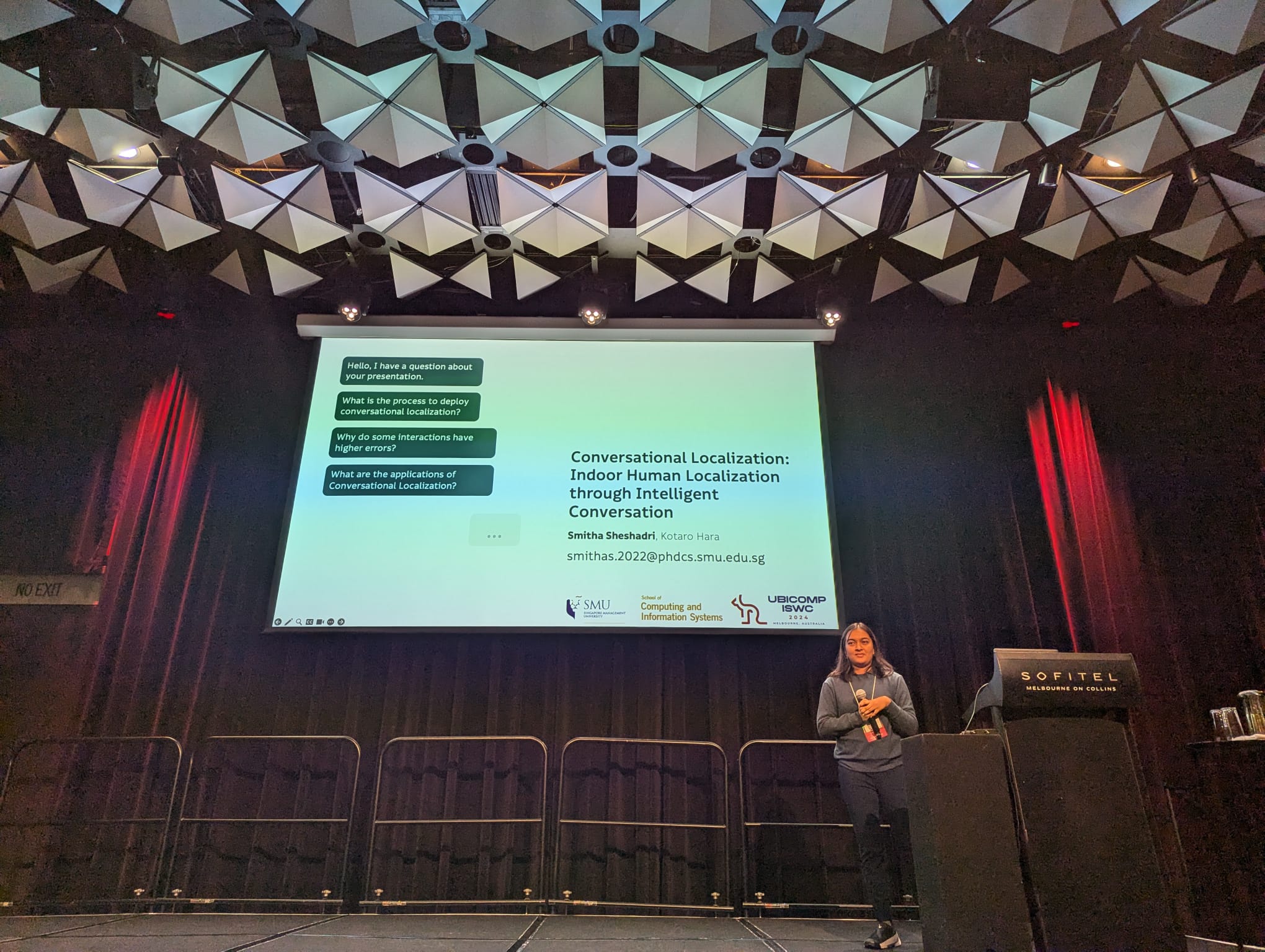

Smitha Sheshadri

PhD Student in Computer Science

Singapore Management University

About Me

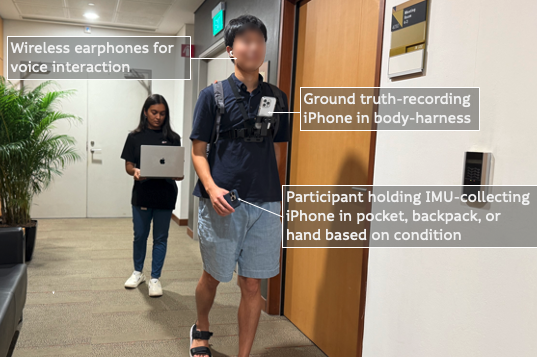

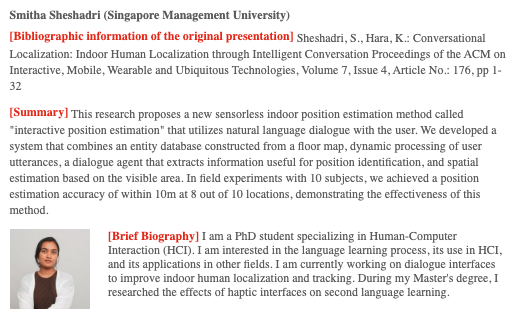

I’m Smitha, a PhD candidate in Human-Computer Interaction at Singapore Management University (SMU). My work focuses on developing solutions for indoor positioning using conversational interaction. I’m especially interested in how natural language, when combined with sensor data and behavioral models, can unlock new possibilities in spatial intelligence, a domain where language is still underused.

My current research explores how conversational input can measurably improve the performance of indoor positioning systems. By integrating natural language interaction into these systems, I aim to design low-cost, infrastructure-free approaches that are both practical and human-centered.

Before my PhD, I completed my master’s at the National University of Singapore (NUS), where I investigated how sensorimotor engagement through haptic touchscreen interfaces could enhance second-language vocabulary learning.

Across projects, I specialize in rapid prototyping (Python is my go-to), user-centered design with iterative pilots, and user behavior analysis. Outside research, I’m always up for discovering new food spots and never get tired of rewatching Jurassic Park 🦖.

Interests

- Natural Language & Conversational Interfaces

- User-as-sensor Solutions

- Indoor Spatial Intelligence

- Language Learning

Education

- PhD in Computer Science, 2022 - Present

  Singapore Management University (SMU)

- MSc in Computer Science, 2018 - 2020

  National University of Singapore (NUS)

- B.Eng in Computer Science and Engineering, 2014 - 2018

  Visvesvaraya Technological University, India